besides games

Yeah, same here. I haven’t pirated games since I was a broke university student. There’s simply no need to when digital storefronts make it easy to get the games I want in the format I want. Some even offer DRM-free offline backups, or in the case of Steam the games stay in my library even if the publisher decides to remove the title from the Steam storefront.

TV and movies are completely different from this, and so much worse. So many different streaming services, some with intrusive ads, and every one wanting their own monthly subscription. I shouldn’t need to search “where is X streaming.” Ever. Titles disappear from these services all the time. Even if you “buy” a digital movie or show, the rights holder can yank it back from you because… reasons?

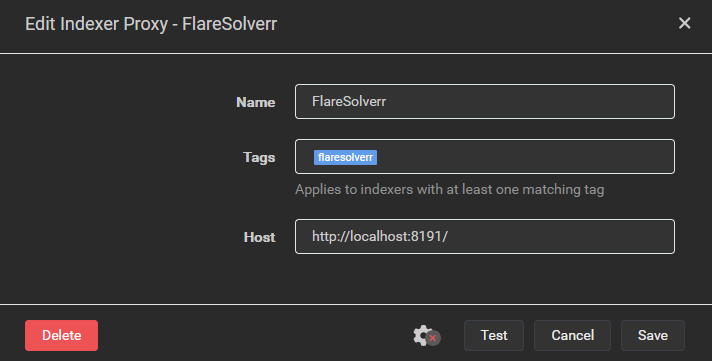

TV and movie distribution is such a garbage deal for consumers that open-source developers have created a complete software stack (the servarr stack) to automate the process of finding and downloading media. Once you get it set up, it’s about a million times more convenient than corporate streaming services.

TL;DR: Getting digital games is easy and feels like a fair deal for the average consumer. Getting movies and TV shows is a pain in the ass and feels like an absolute shit deal for the consumer. I’ll continue to pirate movies and TV shows because, as Gabe Newell famously argued, piracy indicates a service problem.

There is an option to pay for Extended Security Update (ESU) support for Windows 10. It’ll give you access to critical security and Windows Defender antivirus updates, but no fixes or updates to features. There are three ways to pay:

The program would conceivably allow you to kick the can down the road, possibly as far as Oct. 2028. Personally, I opted to switch to Linux months ago instead, and don’t regret my choice.